System Under Development Audit of the Initiation and Planning Phases of the Email Transformation Initiative

Audit Report

Office of Audit and Evaluation

September 2015

Table of contents

Detailed Findings and Recommendations

- The ETI governance structure

- The baseline costs

- Project ownership

- Critical path items

- Partner organizations

- The resource model

- The risk management process

- SSC’s relationship with the vendor

Management response and action plans

Annex B: Summary of Predictive Project Analytics Results

© Her Majesty the Queen in Right of Canada, as represented by the Minister responsible for Shared Services Canada, 2015

Cat. No. P118-2/2015E-PDF

ISBN 978-0-660-03516-1

Executive Summary

What we examined

Through the Email Transformation Initiative (ETI), Shared Services Canada (SSC) was working with its 43 partner organizations, the selected outsourced third party vendor (the vendor), and other key stakeholders to establish a modern email platform that would meet the Government of Canada’s emerging requirements. The email platform was intended to be fully implemented by the end of the fiscal year 2014–2015.

This audit provides assurance to the President of SSC and the Departmental Audit and Evaluation Committee that SSC implemented the appropriate project management and business process controls related to the initiation and planning phases of the ETI. The contract with the vendor was signed, and the project moved to the execution phase on July 1, 2013. Given the timing of the audit, the scope of the audit also included aspects of the execution phase of the ETI up to January 31, 2014. Note that with the transition to the execution phase, responsibility for the project passed from the Senior Assistant Deputy Minister (SADM), Transformation, Service Strategy and Design to the SADM, Project and Client Relationships Branch.

Why it is important

As a new organization, SSC continued to evolve its internal structure and processes as well as its relationship with its partner organizations. At the same time, SSC was responsible for the consolidation and transformation of the federal government’s information technology (IT) infrastructure, with the ETI being a first of its kind IT transformation project across the federal government. An audit of the ETI’s initiation and planning phases not only assisted in the success of the ETI, but provided lessons learned that could be applied to the next wave of transformation projects.

What we found

Throughout the audit fieldwork, the audit team observed examples of how controls were properly designed and were being applied effectively by SSC. This resulted in several positive findings which are listed below:

- A governance structure for the ETI was developed that considered the roles and responsibilities of SSC senior management, central agencies (e.g. Treasury Board of Canada Secretariat [TBS]), partner organizations and the vendor;

- There was a dedicated and experienced project manager and supporting team;

- An independent review of the ETI was completed in June 2013 as part of the TBS Chief Information Officer Branch Gate 3 approval process, and the Project Team played an active role in responding to and implementing its recommendations. SSC also conducted lessons learned sessions related to the initiation and planning phases of the ETI; and

- SSC extensively engaged partner organizations to provide information on the ETI, and developed, at a high level, an awareness and transition strategy.

The audit also identified opportunities where management practices and processes could be enhanced. The following findings identify opportunities for improvement that should be addressed by SSC management:

- Gaps existed in the governance structure related to how key decisions were made in an informed and timely manner;

- The baseline cost numbers used in the business case for the implementation of the ETI required additional validation and documentation;

- Although the service authorization date (i.e. the availability of the new email solution for the deployment within partner organizations) had not changed, key critical path items required to ensure a successful project implementation continued to be delayed;

- Partner organizations may not be ready for the ETI implementation;

- The resource model for the project included gaps related to the integrated planning and use of enterprise resources for the project;

- The risk management process had gaps related to the capture and validation of assumptions, as well as risk mitigation plans for identified risks; and

- The contract with the vendor had penalties associated with the vendor not meeting deadlines. These penalties were modest in comparison to the overall contract and when compared to penalties in contracts of benchmarked successful projects of similar complexity. Furthermore, SSC had yet to develop a formal vendor management program.

Yves Genest

Chief Audit and Evaluation Executive

Background

- The Government of Canada created Shared Services Canada (SSC) on August 4, 2011, to consolidate, streamline and improve information technology (IT) infrastructure services, dedicated to excellence in the delivery of email, data centre and network services across the federal government. SSC’s mandate was to leverage economies of scale across the federal government, so that all federal organizations have access to reliable, efficient, and secure IT infrastructure services, on a cost-recovery basis.

- There were 63 different email systems used by over 300,000 government employees within the 43 partner organizations for which SSC provided services. SSC was working with its 43 partner organizations and key stakeholders to establish a modern email platform that will meet the Government of Canada’s emerging requirements. The Email Transformation Initiative (ETI) was intended to implement a solution that would:

- be more cost-effective;

- improve workplace efficiency and productivity in the public service;

- end waste and reduce duplication;

- make it easier for Canadians to communicate with government; and

- raise the email security profile to better deal with cyber threats.

- The email platform was intended to be fully implemented by the end of the fiscal year 2014–2015. The cost savings that were projected in relation to the deployment of the ETI were to be reflected in SSC’s operating budget beginning April 2015.

- SSC selected one supplier as the prime outsourced third party vendor to implement the email solution. Individual partner organizations were accountable for ensuring that their desktop and business applications could interface with the new email solution. SSC was to provide standard interfaces for application integration for various application platforms.

- As of January 31, 2014, project plans called for a small group of early adopters from within SSC to begin using the new email solution in January 2014. The initial implementation (Wave 0) was intended to occur in March 2014, and consisted of SSC employee mailboxes that resided on the Public Works and Government Services Canada infrastructure (approximately 2,800 employees). The 43 partner organizations were included within one of 3 waves, with Wave 1 beginning in March 2014, Wave 2 by September 30, 2014, and Wave 3 by March 31, 2015.

- As a new organization, SSC continued to evolve its internal structure and processes as well as its relationship with its partner organizations. Challenges continued to exist to ensure alignment between SSC and partner organizations as SSC undertook initiatives related to the consolidation and transformation of the federal government’s IT infrastructure. This was especially true for a first of its kind IT transformation project across the federal government such as the ETI, as SSC required the cooperation of partner organizations in order to ensure the successful deployment of the solution.

- A further challenge for the ETI related to the Treasury Board of Canada Secretariat’s (TBS) Chief Information Officer Branch (CIOB) project management framework and gating process. Although followed by the ETI, the framework developed by TBS did not contemplate requirements for projects with a significant outsourced component such as the ETI.

- Furthermore, the ETI project was required to ensure the appropriate application of the federal government’s new Security Assessment and Authorization (SA&A) process. In November 2012, Communications Security Establishment Canada published Information Technology Security Guidance 33 (Overview of IT Security Risk Management: A Lifecycle Approach), which replaced the former guidance on the Government of Canada IT security risk management process and the certification and accreditation process with SA&A. The purpose of the SA&A process was to ensure the appropriate security controls were selected, implemented, assessed and approved as part of the system development life cycle. Use of the new SA&A process provided additional challenges as the ETI was the first major project in which SSC applied these significant security processes and requirements, and furthermore had to convey these requirements to the vendor.

Objective and Scope

- The objective of the System Under Development Audit of the initiation and planning phases of the ETI was to:

- Provide management with an independent assessment of the progress, quality and attainment of the ETI objectives at defined milestones within the project/program; and

- Provide management with an evaluation of the internal controls of proposed business processes at a point in the development cycle where enhancements could be easily implemented and processes adapted. As the audit was on the planning and initiation phases of an outsourced solution being provided to partner organizations, the focus was on project management as opposed to proposed business processes. Note that the key business processes in this context were related to the management of the vendor, which was assessed as part of the audit.

- Given the timing of the audit, the scope of the audit also included aspects of the execution phase of the ETI up to January 31, 2014.

Methodology

- The audit approach was adapted to include the use of Deloitte’s project risk management methodology, which is based on a quantitative analytical tool called Predictive Project Analytics (PPA) (refer to Annex B). By assessing select project details, a project could be analysed against other successful projects in the PPA database with similar complexity characteristics. The predictive analytic database contained detailed information on over 2,000 successful projects.

- As an initial step, the complexity of the ETI was determined by profiling the project against a predefined set of complexity factors.

- Based on project management standards and leading practices, PPA identified 172 individual project management factors that have been grouped into specific audit criteria, which were the basis for this audit report. Based on the assessed project complexity for the ETI, and a comparison to successful projects of similar complexity, the PPA engine determined the expected level of control / performance across each of these 172 individual project management factors.

- Audit procedures were carried out to determine the actual level of control for the project for these project management factors (or in a handful of cases, project management factors were excluded if not relevant) and compared to the expected level of control, in order to conclude on the current effectiveness of project controls against the audit criteria.
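The comparison of actual to expected levels of control described above can be illustrated with a minimal Python sketch. The factor names, the 1-to-5 scoring scale and the sample scores are hypothetical assumptions used for illustration only. They are not taken from the PPA tool or from the audit evidence, and the PPA engine itself is not reproduced here.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class FactorAssessment:
    """One project management factor (hypothetical structure)."""
    name: str
    expected: int          # expected level of control (assumed 1-5 scale)
    actual: Optional[int]  # assessed level; None means excluded as not relevant


def control_gaps(assessments: List[FactorAssessment]) -> Dict[str, int]:
    """Return the factors whose actual level falls short of the expected level."""
    gaps = {}
    for item in assessments:
        if item.actual is None:
            continue  # excluded factors are not compared
        shortfall = item.expected - item.actual
        if shortfall > 0:
            gaps[item.name] = shortfall
    return gaps


# Hypothetical sample data, not audit results.
sample = [
    FactorAssessment("governance decision timeliness", expected=4, actual=2),
    FactorAssessment("project ownership", expected=4, actual=4),
    FactorAssessment("vendor management program", expected=3, actual=1),
    FactorAssessment("factor judged not relevant", expected=3, actual=None),
]

gaps = control_gaps(sample)
print(gaps)
```

In this sketch, a factor that meets or exceeds its expected level produces no entry, which mirrors how areas with no gap (for example, project ownership) are reported without a recommendation.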

Statement of Assurance

- Sufficient and appropriate procedures were performed and evidence was gathered to support the accuracy of the audit conclusion. The audit findings and conclusion were based on a comparison of the conditions that existed as of the date of the audit, against established criteria that were agreed upon with management. This engagement was conducted in accordance with the Internal Auditing Standards for the Government of Canada and the International Standards for the Professional Practice of Internal Auditing. A practice inspection has not been conducted.

Detailed Findings and Recommendations

The ETI Execution Phase Governance Structure

- We expected the ETI project governance structure to ensure that informed decisions were made, in the correct timeframes, by the appropriate individuals or groups, to ensure the success of the project during the initiation and planning phases.

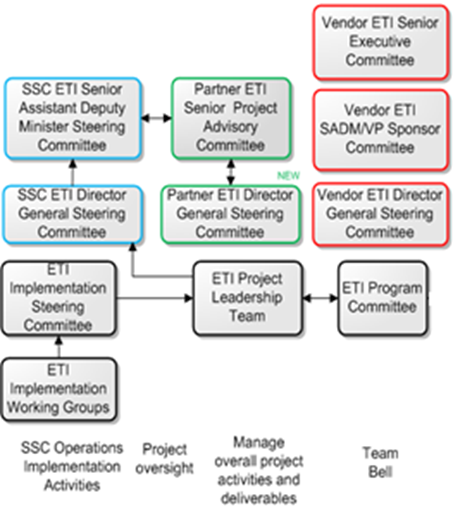

- The ETI governance structure was updated in response to an independent review that was completed in June 2013, as part of the TBS Gate 3 approval process. We found that a comprehensive governance structure for the ETI project execution phase was developed that considered the roles and responsibilities of SSC senior management, central agencies, partner organizations, and the selected vendor for the ETI (refer to Annex C). For example, there were several levels of joint governance bodies between SSC and the selected vendor. This included an executive committee with ultimate project oversight that consisted of the President of SSC, the Chief Operating Officer (COO), a number of Senior Assistant Deputy Ministers (SADM), the vendor’s President, a number of Senior Vice Presidents and Vice Presidents.

- Governance was also provided through organization-wide governance bodies such as the Senior Project and Procurement Oversight Committee that provided organizational oversight over all SSC projects.

- A key decision-making body for this project was the SADM ETI Committee, which was comprised of the SSC SADMs and the core project management team. The decision-making authority section in the Terms of Reference for the Committee indicated that the Committee was meant to review enterprise level issues that could affect the scope, cost or risks of the ETI project and to provide direction and decision-making.

- Although the SADM ETI Committee Terms of Reference indicated that the Committee was intended to meet monthly, interviews and meeting artifact evidence reviewed indicated that the frequency was expected to be bi-weekly; however, meetings had been less frequent. We found the Committee met only four times between October 2013 and January 2014 (October 2, 2013; October 31, 2013; November 18, 2013; and January 24, 2014).

- Given the length of time between SADM ETI Committee meetings, we found that when the Committee did meet, there was often an overload of information to review in the limited time (the Committee met for only one hour at a time). For example, we found the presentation for the meeting on January 24, 2014, was over 60 pages in length, and there were a number of decisions to be made by the Committee, some of which were postponed. Furthermore, there was insufficient time for committee members to review material before the meetings. Often the meetings were more of an information presentation. We found the agenda for the October 31, 2013 meeting indicated that all items were only presented for information.

- As of January 31, 2014, there was no ETI specific Director General (DG) level committee that was actively involved in the ETI governance structure that reported to the SADM ETI Committee.

- Within the Project Team governance, there was an ETI Implementation Steering Committee that had a reporting relationship with the Project Director. Members of the Committee were Senior Directors from Transformation, Service Strategy and Design (TSSD), Projects and Client Relationships Branch (PCR) and Operations Branch with responsibilities including security, service management and email. The Committee was meant to review requirements for SSC-provided services, to provide direction, and to address risks and issues. Through interviews, Committee members indicated that meetings were more of a status update and forum for the project to brief members of the Committee and did not allow for input.

- Given the current governance of the project, there was an increased risk that decisions related to the ETI were not made in an informed or timely manner thereby impacting the pace or quality of the overall implementation of the ETI. As a result, key stakeholders within SSC, such as DG level personnel who support the current email solution and will support the new ETI solution going forward, may not have visibility into the project given their limited role in active project governance.
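The intervals between the four SADM ETI Committee meeting dates reported above can be worked out with a short Python sketch. The meeting dates come from the finding; the 14-day and 31-day thresholds are assumptions chosen here to represent the expected bi-weekly frequency and the monthly frequency in the Terms of Reference.

```python
from datetime import date

# Meeting dates as reported in the finding above.
meeting_dates = [
    date(2013, 10, 2),
    date(2013, 10, 31),
    date(2013, 11, 18),
    date(2014, 1, 24),
]

# Assumed thresholds (in days), used only for this illustration.
BIWEEKLY_DAYS = 14
MONTHLY_DAYS = 31

# Days elapsed between each pair of consecutive meetings.
gaps_in_days = [
    (later - earlier).days
    for earlier, later in zip(meeting_dates, meeting_dates[1:])
]
over_biweekly = [gap for gap in gaps_in_days if gap > BIWEEKLY_DAYS]
over_monthly = [gap for gap in gaps_in_days if gap > MONTHLY_DAYS]

print(gaps_in_days)  # [29, 18, 67]
```

Under these assumed thresholds, all three intervals exceed a bi-weekly cadence, and the 67-day interval between November 18, 2013 and January 24, 2014 also exceeds a monthly cadence.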

Recommendation 1

The Assistant Deputy Minister, Networks and End User should ensure the Senior Assistant Deputy Minister Email Transformation Initiative Committee meets at least monthly during the critical phase of the project prior to the deployment of the solution, for key decisions related to scope, cost or risks. A Director General level committee should be used to analyse and potentially approve some project decisions.

Management response:

SSC management agrees with this recommendation. Meetings are scheduled on a bi-weekly and monthly basis, at different management levels, to review the deployment of the ETI solution and associated project decisions related to scope, cost and risks.

The Baseline Costs

- The ETI Business Case and the Project Charter indicated an estimated annual savings of $49.9 million (plus the annual $6 million Economic Action Plan savings) would be derived from the ETI once the solution was fully implemented by March 2015.

- The baseline costs and savings to be achieved through the implementation of the ETI were compiled using 2009–2010 data that was provided by TBS before the creation of SSC. There were constraints and assumptions related to this information, particularly in defining and comparing baseline costs for email between departments. While there was an awareness of the assumptions used in some of the calculations, a formal audit trail including documentation of assumptions and constraints did not exist.

- The independent review that was completed in June 2013 as part of the Gate 3 approval process indicated that, related to benefits management, “once overall enterprise implementation costs and operating costs are understood, SSC may want to revisit the original business case (taking into account benchmarking of current performance) to validate the business case and provide a basis for on-going project decisions.” SSC partially agreed with this recommendation, although it did not explicitly indicate a plan to revisit and more formally document the baseline costs which were required in order to measure the expected savings.

- Future potential audits on the ETI may question the actual savings achieved as a result of the ETI solution if the assumptions and calculations used for the baseline costs are not captured while the knowledge remains within SSC.

Recommendation 2

The Assistant Deputy Minister, Networks and End User should revisit the assumptions used in the calculation of the baseline costs and ensure a formal audit trail is retained.

Management response:

SSC management agrees with this recommendation. A rebaseline exercise of all transformation programs, including email, occurred in the fall of 2014. Rebaselined costs will be approved by the COO and the President of SSC.

Project Ownership

- We expected management to provide an appropriate level of organizational ownership and project direction, including the clarity of objectives, to ensure proper alignment with the organizational strategy.

- The ETI project was a key transformation initiative for SSC. We found that the ETI Project Sponsors were identified as the SADM TSSD and the SADM PCR. The ETI Business Case and Project Charter outlined expectations related to the delivery and outcomes related to the ETI. Senior management had been integrated into the governance structure and oversight of the project to ensure the project direction was maintained. Success for the project could ultimately be measured in the successful implementation of the ETI solution.

Critical Path Items

- We expected appropriate controls to be implemented to ensure the project could deliver against its objectives, timelines and budgets through project plans and schedules during the initiation and planning phases of the project. This included ensuring appropriate mechanisms were implemented to ensure due diligence would be conducted prior to the new email solution being deployed within partner organizations.

- The ETI was being implemented according to the SSC Project Governance Framework, with baseline requirements captured up front and articulated in the Project Charter and in the contract with the vendor. The Project Team indicated that overall requirements for the project had not changed, although there was an expectation gap between the vendor and SSC related to security requirements and deployment plans.

- Issues related to the project were being managed but were being resolved late, or had yet to be resolved. Issues related to the timing and quality of vendor deliverables had caused an initial 12-week delay, and there had been subsequent delays related to SA&A approval. SSC and the vendor continued to work through the acceptance of deliverables. The contract with the vendor stipulated that the vendor would not receive any payments until specific milestones were met, and failure to meet these milestones would result in payment credits. During the scope of this audit, no payments were made to the vendor and no credits were received by SSC.

- As of January 31, 2014, key documents had yet to be finalized, such as the Functional Requirements Traceability Matrix, which traces the functional requirements outlined for the ETI to the design specifications of the build phase, and ultimately the test cases used to ensure the design functions in a manner consistent with the initial requirements. The Security Requirements Traceability Matrix had also not been finalized, which was a critical document for the overall SA&A process and approval. Overall, as of January 31, 2014, the Project Team indicated there was an extensive amount of review and effort left related to the completion and approval of SA&A documentation for the ETI.

- Although the date for service authorization remained March 31, 2014, dates related to key critical path items required before service authorization continued to slip. SA&A Gate 3 approval was scheduled for March 31, 2014, at the same time as service authorization; however, SSC acceptance testing was scheduled to be finalized on April 28, 2014. Furthermore, Wave 0 was expected to be completed by April 25, 2014, before final acceptance testing.

- Although the program management plan was required within 60 days of contract award (June 25, 2013) by the vendor, it had yet to be finalized. This plan was intended to provide an overview of the management of the ETI program (email service). The transition plan related to the detailed activities required for partners to prepare and deploy to the new email solution was originally expected to be completed by November 26, 2013, and as of January 31, 2014, was noted in status reports as "to be determined".

- The ETI was the first of its kind transformation project for the federal government and also involved a large outsourcing relationship. Given this, SSC learned lessons that affected the timing of the project, including:

- issues with connectivity to the vendor data centres;

- implementation of security requirements;

- the change in how email will be migrated;

- slower than expected sorting out and formalizing of documentation; and

- aggressive project timelines.

- Given the current timing and state of deliverables, there was a risk that the tight timelines may result in shortcuts being taken or mistakes being made, especially if there was not a formal due diligence process related to ensuring the development and acceptance of required project documentation was incorporated into go/no go criteria and approval for service authorization.
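The sequencing concern in the scheduled dates reported above can be checked with a short Python sketch. The milestone dates come from the finding. The dependency list is an assumption read from the finding (that SA&A approval and acceptance testing should precede service authorization, and that acceptance testing should precede the completion of Wave 0); it is not an SSC project schedule.

```python
from datetime import date

# Scheduled dates as reported in the finding above.
milestones = {
    "SA&A Gate 3 approval": date(2014, 3, 31),
    "service authorization": date(2014, 3, 31),
    "Wave 0 completion": date(2014, 4, 25),
    "SSC acceptance testing finalized": date(2014, 4, 28),
}

# Assumed ordering for illustration: (prerequisite, dependent milestone).
assumed_dependencies = [
    ("SA&A Gate 3 approval", "service authorization"),
    ("SSC acceptance testing finalized", "service authorization"),
    ("SSC acceptance testing finalized", "Wave 0 completion"),
]


def sequencing_conflicts(dates, dependencies):
    """List prerequisites scheduled later than the milestone that depends on them."""
    conflicts = []
    for prerequisite, dependent in dependencies:
        overrun_days = (dates[prerequisite] - dates[dependent]).days
        if overrun_days > 0:
            conflicts.append((prerequisite, dependent, overrun_days))
    return conflicts


conflicts = sequencing_conflicts(milestones, assumed_dependencies)
for prerequisite, dependent, overrun_days in conflicts:
    print(f"{prerequisite} is scheduled {overrun_days} days after {dependent}")
```

Under these assumed dependencies, acceptance testing is scheduled 28 days after service authorization and 3 days after the completion of Wave 0, while SA&A Gate 3 approval falls on the same day as service authorization and leaves no margin.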

Recommendation 3

The Assistant Deputy Minister, Networks and End User should ensure the development of consolidated criteria for the go/no go decision for authorization and deployment.

Management response:

SSC management agrees with this recommendation. A Service Authorization process has been put in place to ensure that go/no go consolidated criteria are based on the following five lenses: Business Alignment, Architecture, Cyber and Information Technology Security, Operations, and Communications.

The Service Authorization process includes steps to review these criteria by the Service Review Sub-Committee (SRSC). Based on SRSC reviews a go/no go decision is recommended to the COO for authorization and deployment of each service release.

Partner Organizations

- The independent review that was completed in June 2013, as part of the Gate 3 approval process, indicated that SSC should document the stakeholder engagement plan (including engagement governance) and accelerate the engagement of key stakeholders within partner organizations. It indicated that key areas of the engagement should include implementation and operational resource requirements, as well as organizational change management. SSC agreed with the recommendation of the independent review and had extensively engaged partner organizations on providing information on the ETI project.

- It was noted that although SSC could engage partner organizations, it was ultimately the responsibility of the partner organizations to ensure they were ready for the migration to the new email solution. SSC was required to understand the level of readiness and potential issues related to readiness that may need to be addressed. There had been less of an information flow from partner organizations to SSC in terms of SSC understanding partner readiness. Surveys of partner organizations were conducted by SSC during the initiation phase of the project to obtain a preliminary view of each partner’s readiness in order to determine wave placement, but further formalized surveys or information gathering activities had not been subsequently carried out in relation to readiness. For instance, key performance indicators related to partner readiness had been developed but as of January 31, 2014, had yet to be completed or reported on for partner organizations. There was no formalized process to develop benchmarks that outline the expected level of readiness for partner organizations at different stages of the ETI project lifecycle. A process would have made it easier to track which of the partner organizations may have been falling behind. When identified, SSC could have provided assistance or guidance to those partner organizations.

- SSC was collecting weekly partner status summaries. For the week of December 20, 2013, the status summary indicated that several issues or requests for Wave 1 partners had yet to be addressed. Several partner organizations raised concerns related to the timing and availability of resources and documents needed to ensure their ability to deploy the solution.

- Partner organizations may not have enough specific information to understand the level of effort and timing required to ensure they were prepared for the roll out of the email solution. There was no integrated work plan for partner organizations, and there was no evidence that partner organizations had fully integrated the ETI into their planning. The development of communications and change management material had been very late in the project due both to issues with the quality and timing of vendor deliverables and to changes in user engagement. For instance, given issues with the email migration method tested by the vendor, the Project Team had only recently decided to change the data migration method. As of January 31, 2014, draft communication and change management material was just going through the approval phase. The Wave 1 transition documentation outlining the activities required of partners for the implementation of the ETI was still undergoing revisions by the vendor.

- SSC as a department was deploying the ETI during Wave 0, and lessons learned by SSC with respect to the deployment of the ETI were expected to be shared with the other partner organizations; however, there was a very short timeframe between Wave 0 and Wave 1 in relation to the deployment preparation activities required of partners.

- Partner organizations may not be ready to deploy the solution, causing delays in the overall implementation of the solution, leading to both a reputational risk to SSC as well as a risk of potentially not realizing the savings that have been projected and already assumed to be taken from SSC’s operating budget beginning April 2015.

Recommendation 4

The Assistant Deputy Minister, Networks and End User should develop a more systematic approach to partner readiness, with the expected level of partner organization readiness tied to the migration schedule, and an escalation process when partner organizations have not met the expected level of readiness.

Management response:

SSC management agrees with this recommendation. The ETI project has developed a more systematic approach to partner readiness and has put in place a process to gather information from all stakeholders including partners, SSC and Team Bell. Meetings between Project Integration and Implementation Project Managers (PM) and ETI Partner PMs occur on a regular basis to ensure partner readiness. A monthly Partner Readiness Dashboard has been implemented as a systematic approach to monitor partner organization readiness tied to each project stage. In January 2014, an escalation process was developed in order to identify partners that have not met the expected level of readiness. Risk mitigation action plans have been developed to resolve implementation issues.

Recommendation 5

The Assistant Deputy Minister, Networks and End User should ensure those lessons learned by SSC through the Wave 0 deployment, including the integration of applications and the resource requirements of deployment, are quickly communicated to Wave 1 partners. This needs to be supported by change management and communication material.

Management response:

SSC management agrees with this recommendation. Lessons learned, volume of incidents, and responses to implementation surveys for Wave 0 have been gathered and are beginning to be communicated and shared with Wave 1 partner organizations in order to help provide them with a smooth transition to the new email service.

The Resource Model

- We expected to find a framework in place to ensure relevant business units provided adequate support to the project to support its effective delivery.

- We found that, although senior management was aligned on the overall approach and outcome of the project, the implementation of a matrix model was challenging. Coordination and resource allocation between business units and the Project Team was difficult because of their different perspectives on the level of effort and timing of tasks required to complete the project.

- We also expected that the roles and responsibilities for the ETI project had been clearly defined within SSC, and supported by a defined resource model, which included an integrated plan for engagement and use of resources across the enterprise.

- We found that a resource gap had been an issue since the outset of the project. The Project Team had anticipated a potential gap, and was filling it with contract resources and resources from the Operations Branch.

- The ETI was being implemented according to the SSC Project Governance Framework, which the ETI project management described as challenging given its current structure. TSSD took the lead role for the initiation and planning phases. PCR will take the lead for the project execution and deployment (i.e. Stages 4 and 5). Eventually the project will become a program run by the Operations Branch.

- The independent review that was completed in June 2013, as part of the TBS CIOB Gate 3 approval process, indicated that due to the uniqueness of this project, SSC should consider additional resource continuity throughout its lifecycle. SSC partially agreed with the recommendation and indicated that senior members from TSSD would remain on governance committees for the remainder of the project. However, key stakeholders from other areas of the enterprise, such as the Operations Branch, were not specifically identified in SSC’s response as having a formal role through the early phases of the project.

- Mechanisms had not been developed to provide an integrated, enterprise-wide approach to the effective utilization of resources across SSC throughout the lifecycle of a project, including those resources that are supporting the current email solution. Furthermore, an integrated project plan that included resources from across the enterprise was not yet finalized and approved as of January 31, 2014. This resulted in stakeholders within business units being unsure of the project’s critical path or their role in ensuring that it was met.

Recommendation 6

The Assistant Deputy Minister, Networks and End User should define the use of the matrix model for transformative projects such as the Email Transformation Initiative, including more formalized processes to ensure a more integrated and transparent enterprise wide project and resource plan throughout its lifecycle.

Management response:

SSC management agrees with this recommendation. SSC is currently restructuring along lines of service. Resources within a service line will work from planning, to design, to implementation, and finally to operations.

The Risk Management Process

- We expected that a framework would be in place to ensure project risks had been appropriately identified, captured and reported and mitigation plans developed. This included project assumptions being robustly validated and, where required, captured as project risks with appropriate mitigation measures.

- The ETI had a documented risk management process and a risk register for the tracking of risks. A review of the risk register for the project execution phase indicated that many risks remained open and the mitigation measures had not been implemented. For example, the risk related to implementation impacts due to other SSC partner organization priorities and other SSC projects that impacted partner organizations, included mitigation measures related to early engagement with partners during the execution phase of the project. Furthermore, the weekly partner status summary for the week of December 20, 2013, indicated several issues or requests for Wave 1 partners that had yet to be addressed and may have caused further delays. These issues had not been captured in the risk register.

- The project risk register also had a number of closed risks. The Project Team indicated that as risks were identified they were closed in the risk register and then deemed to be issues. Issues were then captured and reported through project governance; however, comprehensive plans to deal with these issues, and to further minimize their ongoing risk to the project, were not observed as part of the project’s risk management activities. Examples of these closed risks included:

- Completion of the TBS CIOB Standard on Email Management. This risk was closed in August 2013; however, the standard had still not been finalized as of January 31, 2014; and

- The vendor may not be ready to deliver. This risk was closed as the risk was realized (i.e. the vendor requested a 12-week delay); however, the ongoing management of the vendor (timing and quality of deliverables) remained an ongoing source of risk.

- Only a small number of high-level assumptions were captured in the Project Charter. A number of assumptions were either not captured in detail or were not robustly validated by the project. Therefore, they did not have accompanying risk management plans and have resulted in project issues, including:

- Assumptions related to carving out resources from current operational roles to just work on the project were not realized, as the expectations related to the ability to mobilize resources to come to the project were optimistic;

- The time required to complete detailed and sufficient SA&A activities was underestimated at the outset of the project;

- Assumptions related to the capacity of the vendor being able to provide change management and communication services without major involvement from SSC were not realized; and

- Assumptions related to the ability of the project to perform data migration without requiring a more project-resource-intensive server-to-server migration strategy were not realized.

- Risks related to those assumptions outlined above were not fully captured in the risk register.

- If assumptions are not robustly validated and documented, including determining and tracking the potential risks related to those assumptions and mitigation actions for those risks, this may cause additional project issues.

Recommendation 7

The Assistant Deputy Minister, Networks and End User should ensure that risk management activities capture all risks and issues and provide suitable mitigation plans against them.

Management response:

SSC management agrees with this recommendation. Risks and their desired mitigation are a core focus of ETI beyond the monthly reporting requirements to Treasury Board. ETI believes that proper risk identification and mitigation measures help to maintain project timelines, as solutions are identified and quickly put into place.

SSC’s Relationship with the Vendor

- We expected that the relationship with the vendor for such a large outsourcing deal would be structured as a partnership with shared benefits and risks, and that a vendor management plan would be developed for the management of the vendor after deployment.

- The contract between SSC and the vendor was complex. It outlined the requirements for the vendor related to the development and deployment of the ETI solution. Given its complexity, the Project Team indicated there was an expectation gap between the vendor and SSC, related to security requirements and deployment plans, and that SSC and the vendor continued to work through the acceptance of deliverables. This expectation gap resulted in delays in the project, to both the timing and quality of deliverables being provided by the vendor.

- The Project Team indicated the contract terms may have caused some of the issues outlined above. Based on the terms of the contract, the vendor was not paid until completing Operational Readiness Phase 1, and then again at the completion of Operational Readiness Phase 2. There were penalties associated with the vendor not meeting these deadlines; however, they were modest in comparison to the overall contract and when compared to penalties found in contracts of benchmarked successful projects of similar complexity.

- SSC had not yet determined where the central management function for the SSC-vendor relationship would be positioned in the Department. There were many component pieces of the vendor management function being developed, but no central group had overall accountability to oversee the various aspects of the relationship that was managed by different areas within the organization. The Project Team was currently working to determine how the vendor management program would be executed both during and after the transition from the project to the program phase. Without this structure, there was a risk that vendor relations may not be adequately managed. This was an important consideration not just for the ETI but for SSC as a whole, given the many other planned transformation projects that will require the management of outsourced vendors.

Recommendation 8

The Assistant Deputy Minister, Networks and End User should ensure that going forward, arrangements with outsourced vendors are more partnership oriented, including sharing of benefits and risks, especially for large and complex transformation projects.

Management response:

SSC management agrees with this recommendation. This recommendation will be taken into consideration in subsequent complex transformation projects. Procurement strategy is developed prior to request for proposal and is consistent with Government of Canada guidelines. For example, the Request for Information process gathers the industry's interest to partner with the Government of Canada and to share benefits and risks. Using the input gathered at this stage, SSC establishes its preferred procurement strategy for a particular project through a subsequent Request for Proposals.

Recommendation 9

The Assistant Deputy Minister, Networks and End User should develop the overall longer term vendor management program for the Email Transformation Initiative, including the identification of the lead unit responsible for the coordination of the program and the relationship with the vendor.

Management response:

SSC management agrees with this recommendation. A vendor management unit has been developed within SSC to implement a vendor management program for Your Email Service. A lead unit responsible for the coordination of the program and the relationships with the vendor has been identified. The management of the service provider contract was transferred under the DG, Horizontal Lead, Data Centre, in January 2015.

Conclusion

- Generally, the audit found that control practices related to the initiation and planning phase, as well as the first seven months of the execution phase of the ETI, had been implemented and were working as intended. Opportunities for improvement to strengthen project management and business process controls were noted in relation to project governance, resource management, risk management, readiness of partner organizations and vendor management.

- The identification of these opportunities for improvement provides valuable lessons for future transformation initiatives. However, if they are not addressed, they may cause further slippage of deployment dates, or deficiencies in the solution or its related controls once it is deployed. This may impact the reputation of SSC as the provider of IT infrastructure services to the federal government, and prevent the achievement of the expected benefits from the ETI deployment, including cost savings already accounted for.

Management Response and Action Plans

Recommendation 1

The Assistant Deputy Minister (ADM), Networks and End User should ensure the Senior Assistant Deputy Minister Email Transformation Initiative (SADM ETI) Committee meets at least monthly during the critical phase of the project prior to the deployment of the solution, for key decisions related to scope, cost or risks. A Director General (DG) level committee should be used to analyse and potentially approve some project decisions.

| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
|---|---|---|
| SADM ETI Committee met at least monthly during the critical phase of the project prior to the deployment of the solution, for key decisions related to scope, cost or risks. | ADM, Networks and End User | Completed in February 2014 |
| The ETI DG Steering Committee was established in February 2014. The Committee met on a bi-weekly basis to review and resolve ETI-related issues that could affect the schedule, cost or risks of the ETI project and it provided direction and decision-making. | DG ETI | Completed in February 2014 |

Recommendation 2

The Assistant Deputy Minister (ADM), Networks and End User should revisit the assumptions used in the calculation of the baseline costs and ensure a formal audit trail is retained.

| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
|---|---|---|
| A rebaseline exercise of all transformation programs, including email, occurred in the fall of 2014. A revalidation of program transformation costs will be undertaken on a regular basis. | ADM, Networks and End User | Completed in September 2014 |

Recommendation 3

The Assistant Deputy Minister, Networks and End User should ensure the development of consolidated criteria for the go/no go decision for authorization and deployment.

| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
|---|---|---|
| Service Authorization for Release 1.0 was approved based on the above-mentioned consolidated criteria (the five lenses). Service Authorization process includes steps to review these criteria by the Service Review Sub-Committee (SRSC) and, based on SRSC reviews a go/no go decision is recommended to the Chief Operating Officer for authorization and deployment of the relevant service release. | Director General, Email Transformation Initiative | Release 1.0 completed in February 2015 |

Recommendation 4

The Assistant Deputy Minister, Networks and End User should develop a more systematic approach to partner readiness, with the expected level of partner organization readiness tied to the migration schedule, and an escalation process when partner organizations have not met the expected level of readiness.

| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
|---|---|---|
| Key to a more systematic approach to partner readiness has been the ability of SSC to better identify risks and deficiencies in partner readiness planning. Measures have been put in place for a more systematic approach including the implementation of a monthly Partner Readiness Dashboard, which began to be provided to the project in January 2014. From this dashboard, an escalation process to successive levels of authority is pursued to address outstanding partner implementation readiness issues. | Director General, Email Transformation Initiative | Completed in January 2014 |

Recommendation 5

The Assistant Deputy Minister, Networks and End User should ensure those lessons learned by SSC through the Wave 0 deployment, including the integration of applications and the resource requirements of deployment, are quickly communicated to Wave 1 partners. This needs to be supported by change management and communication material.

| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
|---|---|---|
| Lessons learned by SSC Wave 0 migration are beginning to be shared with Wave 1 partners and other partner organizations. For example, a high-level debrief of SSC’s migration experience was provided to the Public Service Management Advisory Committee on March 27, 2015, as well as with non-partner organizations on April 7, 2015 (Heads of Information Technology Committee). The lessons learned being compiled also include the integration of applications (and the availability of the Control Test environment to test applications) and human resources/Service Desk resources requirements for migration preparedness. Each of these elements is being incorporated into change management and communications material, and will subsequently be posted on Email Transformation Initiative’s (ETI) GCPedia pages, and communicated with partner Project Managers as well. Lessons learned will be gathered from Waves 1, 2 and 3 and shared with partners as they prepare to migrate. | Director General, ETI | Completed in April 2015 |

Recommendation 6

The Assistant Deputy Minister (ADM), Networks and End User should define the use of the matrix model for transformative projects such as the Email Transformation Initiative (ETI), including more formalized processes to ensure a more integrated and transparent enterprise wide project and resource plan throughout its lifecycle.

| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
|---|---|---|
| SSC’s realignment along lines of service will help the implementation of the matrix model, for the majority of the projects, by aligning resources of the team under the same branch. One of the key elements in implementing the matrix model is to clearly define direct and indirect reporting models. All contributing teams to a project will indirectly report to the Project Authority, with clear objectives and deliverables. In the matrix model management, performance objectives of individuals and teams will be established by consolidating objectives through the direct and indirect reporting responsibilities. | ADM, Networks and End User and Director General, ETI | June 2015 |

Recommendation 7

The Assistant Deputy Minister, Networks and End User should ensure that risk management activities capture all risks and issues and provide suitable mitigation plans against them.

| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
|---|---|---|
| Risks are being managed following the Email Transformation Initiative (ETI) risk management process. Risks are being reviewed on a regular basis and mitigations are identified, tracked and communicated. The project conducts bi-weekly meetings to review and escalate project risks and identify, track and communicate proposed mitigations to the leadership team. As required, certain more complicated risks are presented successively to the ETI Director General (DG) Steering Committee, the Senior Assistant Deputy Minister ETI Committee, and the ETI Special Project Advisory Committee. | DG ETI | June 2015 |

Recommendation 8

The Assistant Deputy Minister (ADM), Networks and End User should ensure that going forward, arrangements with outsourced vendors are more partnership oriented, including sharing of benefits and risks, especially for large and complex transformation projects.

| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
|---|---|---|
| When appropriate and based on future planned initiatives, SSC will ensure arrangements with outsourced vendors are more partnership oriented, including sharing of benefits and risks. This can be assessed during the Request for Information stages, leading to an eventual Request for Proposal. | ADM, Networks and End User | Ongoing |
| SSC has instituted a new senior management checkpoint for major procurement activities, which will allow a validation step in the process permitting senior management to assess the risks and assume any residual risks related to a particular procurement. This will allow for a continuous learning cycle. | ADM, Networks and End User | Ongoing |

Recommendation 9

The Assistant Deputy Minister, Networks and End User should develop the overall longer term vendor management program for the Email Transformation Initiative, including the identification of the lead unit responsible for the coordination of the program and the relationship with the vendor.

| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
|---|---|---|
| A vendor management unit has been developed within SSC to implement a vendor management program, and a lead unit responsible for the coordination of the program and the relationships with the vendor has been identified. | Director General Horizontal Lead, Data Centre | Completed in January 2015 |

Annex A: Audit Criteria

The audit criteria and sub-criteria used in the conduct of this audit were based on project management standards (PMBOK, PRINCE2 and COBIT 4.1 PO 10) and leading practices of over 2,000 individual project management factors that have been grouped into the specific audit criteria below. These criteria and sub-criteria were agreed to by SSC management at the beginning of the audit examination.

Criterion 1 – Governance

We expected the ETI project governance structure to ensure that informed decisions were made, in the correct timeframes, by the appropriate individuals or groups, to ensure the success of the project during the initiation and planning phases.

- 1.1 Governance Approach – Appropriate approaches are used to provide governance to the project, including effective ongoing governance and steering committee involvement.

- 1.2 Accountability Model – Effective approaches are in place to allocate and manage accountability for the project, including accountability of the project manager.

- 1.3 Issue Management – There is effective control over issue management including tracking and resolution.

- 1.4 Role Management – Clear project roles are established and are effective for the nature of the project.

- 1.5 Benefits Management – Project benefits are clearly documented and an effective approach is in place to track against these benefits.

- 1.6 Budgets – The project budget is appropriately established, managed and controlled.

Criterion 2 – Ownership

We expected management to provide an appropriate level of organizational ownership and ensure project direction, including the clarity of objectives, was aligned with the organizational strategy.

- 2.1 Executive Support – Management provides sufficient oversight and is appropriately engaged and aligned with project delivery.

- 2.2 Direction – Effective mechanisms are in place to set and maintain project direction, including the clarity of objectives and alignment to organizational strategy.

Criterion 3 – Delivery Management

We expected appropriate controls to be implemented to ensure the project could deliver against its objectives, timelines and budgets through project plans and schedules during the initiation and planning phases of the project. This included ensuring appropriate mechanisms were implemented to ensure due diligence would be conducted prior to the new email solution being deployed within partner organizations.

- 3.1. Delivery – Effective mechanisms are in place to support the delivery of the correct project outcome.

- 3.2. Acceptance Model – An effective approach is in place to obtain acceptance from key stakeholders.

- 3.3. Design – Requirements are effectively identified and managed to support effective design of the final solution.

- 3.4. Planning – The project is effectively planned at a strategic level so that overall progress can be adequately tracked and course corrections can be made, as necessary.

- 3.5. Scheduling – Schedule management is effective; it is clear what tasks are required to achieve the project objectives, in what sequence and with what resources.

- 3.6. Managing Uncertainty – There is an effective approach to managing uncertainty and ambiguity.

- 3.7. Stakeholder Management – Stakeholders are effectively managed, including stakeholders both impacting and impacted by the project's activities.

Criterion 4 – Business Unit

Business unit in this context means the areas of the business within SSC that have a role in supporting the project. We expected to find a framework in place to ensure relevant business units provide adequate support to the project to support its effective delivery.

- 4.1. Business Unit Support – Relevant business units provide adequate support to the Project to support its effective delivery.

Criterion 5 – Resource Management

We expected that the roles and responsibilities for the ETI project have been clearly defined within SSC, and supported by a defined resource model, which included an integrated plan for engagement and use of resources across the enterprise.

- 5.1. Resource Selection – Project resources are appropriately planned and selected.

- 5.2. Resource Commitment – Project resources are appropriately committed to the project, particularly those deployed from business units.

Criterion 6 – Risk Management

We expected that a framework would be in place to ensure project risks had been appropriately identified, captured, reported, and mitigation plans developed. This included project assumptions being robustly validated and where required captured as project risks with appropriate mitigation measures.

- 6.1. Approach to Risks – The approach to manage risk is appropriate given the nature of the project.

- 6.2. Management of Risks – Risks, including political, stakeholder and reputational risks, are effectively identified and managed.

Criterion 7 – Contracting Approach

We expected that the relationship with the vendor for such a large outsourcing deal would be structured as a partnership with shared benefits and risks, and that a vendor management plan would be developed for the management of the vendor after deployment.

- 7.1. Contracting Approach – Approaches for selecting and contracting with external suppliers are appropriate to reduce risk and ensure alignment with SSC's priorities.

- 7.2. Vendor Management – Relationship with the vendor is appropriately managed to support effective project delivery.

Annex B: Summary of Predictive Project Analytics Results

Predictive Project Analytics (PPA) is a project risk management methodology based on a quantitative analytical engine. The predictive analytic database contains detailed information on over 2,000 successful projects. These projects vary by industry, type and size and reflect their unique complexities and drivers of success. By assessing select project details, a project can be analyzed against other successful projects in the PPA database with similar complexity characteristics. This analysis then indicates specific areas to address in order to most effectively increase the chances of a project’s success.

The complexity of a project is determined by profiling the project against a predefined set of 29 complexity factors related to one of five areas:

- Context;

- Technical;

- Social Factors;

- Ambiguity; and

- Project Management.

PPA assesses project complexity on a 10-point "Helmsman" scale, shown in the following table. The complexity scale is logarithmic, rather than linear, so the difference in complexity between a project with a rating of 9 versus a project with a rating of 8 is much greater than the difference in complexity between a project with a rating of 6 versus a project with a rating of 5.

The ETI project was rated as "High" complexity with a complexity rating of 7.3 on the 10-point scale.

| Helmsman scale | Difficulty level | Project characteristics |
|---|---|---|
| < 4 | Very minor | Business as usual type projects, normally not formalized by most organizations. |
| 4 – 5 | Minor | Generally well defined projects undertaken within a business unit. |
| 5 – 6 | Moderate | Generally core business projects that may be undertaken across several business functions and often have executive-level attention. |
| 6 – 7 | Somewhat high | Generally larger projects commonly undertaken across the organization. Normally have board-level attention. |
| 7 – 8 | High | Generally larger projects commonly undertaken across the organization and create a noticeable impact on the organization. Normally have board-level attention. |
| 8 – 9 | Very High | Larger projects that are ambiguous in nature are seldom undertaken across the organization and create a significant impact within the industry. Has board-level attention. |
| 9 – 10 | Exceptional | Largest projects rarely undertaken by organizations that may involve and impact multiple organizations. |
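As an illustration only, the lookup implied by the Helmsman table can be sketched in Python. The function name `difficulty_level` and the treatment of band boundaries (lower bound inclusive, upper bound exclusive, with 10 falling in the top band) are assumptions; the table does not state which band a boundary value belongs to, and the assumed valid range of 0 to 10 is likewise not stated in the report.

```python
# Upper bounds and labels transcribed from the Helmsman table above.
# Assumption: each band is [lower, upper); the report does not say
# which band a boundary value such as 7.0 belongs to.
HELMSMAN_BANDS = [
    (4.0, "Very minor"),
    (5.0, "Minor"),
    (6.0, "Moderate"),
    (7.0, "Somewhat high"),
    (8.0, "High"),
    (9.0, "Very High"),
    (10.0, "Exceptional"),
]


def difficulty_level(rating: float) -> str:
    """Map a Helmsman complexity rating to its difficulty level.

    Hypothetical helper for illustration; not part of the PPA engine.
    """
    # Assumption: ratings run from 0 to 10 inclusive.
    if not 0 <= rating <= 10:
        raise ValueError("rating must be between 0 and 10")
    for upper, level in HELMSMAN_BANDS:
        if rating < upper:
            return level
    # Only a rating of exactly 10 reaches this point.
    return "Exceptional"


# The ETI rating reported in this annex.
print(difficulty_level(7.3))
```

With the ETI rating of 7.3 this returns "High", matching the rating stated above.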

A summary of complexity across the five areas is outlined in Figure 1.

Figure 2 indicates that key factors driving the complexity of ETI include:

- Project Structure & Management

- Project Structure – the management structure of the project is complex as there are many levels of governance and stakeholders.

- Level of Accountability – the level of accountability is high as the accountability of the project is to an outcome.

- Technical Design

- Integration Complexity – there are many email systems (i.e. 63) that need to be integrated across 43 partner organizations.

- Ambiguity

- Risk – the risk level is high because project failure could limit key strategic opportunities for SSC.

- Stakeholders

- Number of Stakeholder Groups – there are many stakeholder groups involved in the ETI project.

Based on the assessed project complexity as outlined above, the PPA Analytical Engine determined the level of control / performance necessary to achieve success across individual project management factors. The PPA Analytical Engine has a total of 172 different project management factors that have been determined to be key to the success of a project. These project management factors have been grouped under seven criteria:

- Governance;

- Ownership;

- Delivery Management;

- Business Unit;

- Resource Management;

- Risk Management; and

- Contracting.

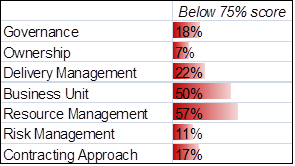

Overall PPA results assessed the actual level of control against the expected level of control (based on project complexity) across each criterion, as outlined in Figure 3.

In interpreting Figure 3, note that:

- The blue bar represents the actual level of control, expressed as a percentage (quartile) relative to successful projects within the database.

- Below 50% (the lower two quartiles) indicates an increased risk of failure when compared with successful projects.

- Below the 3rd quartile (75%), a project is considered underperforming and will likely have issues that will impede success.

- The red line marks 75% of successful projects; falling below it is the minimum entry point to the “concern” area.

- The black line represents average actual vs. expected level of control and is unique to each program/project that is profiled.
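The thresholds in the list above can be read as a simple banding rule. The following Python sketch is an illustration under stated assumptions: the function name `control_band` and the band labels are hypothetical, and treating exactly 50% and exactly 75% as belonging to the higher band is an assumption, since the report only describes what falls "below" each threshold.

```python
def control_band(percentile: float) -> str:
    """Classify an actual level of control, expressed as a percentage
    relative to successful projects, using the 50% and 75% thresholds.

    Hypothetical helper; labels paraphrase the report's wording.
    """
    if not 0 <= percentile <= 100:
        raise ValueError("percentile must be between 0 and 100")
    if percentile < 50:
        # Lower two quartiles.
        return "increased risk of failure"
    if percentile < 75:
        # Below the red line (3rd quartile): the "concern" area.
        return "underperforming"
    # Assumption: 75% and above is treated as acceptable.
    return "within acceptable level of control"


for value in (40, 60, 80):
    print(value, control_band(value))
```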

Note that within the seven criteria of PPA, all but the business unit criterion are operating at an overall level that is within the acceptable level of control; however, there were several control factors within each criterion which were assessed as not within the acceptable level of control. Figure 5 provides an overview of the percentage of control factors within each criterion that are below expected results.

The more granular results of potential control factor issues within the seven criteria can also be determined through further analysis of the more detailed graph by audit sub criteria that make up the left hand side of Figure 3. Based on the results as calculated by the PPA analytical engine, and outlined in Figure 3, the following audit sub-criteria (areas of project controls) are currently underperforming in relation to the expected level of control for the project:

- Role Management – In the context of the ETI project, these controls are underperforming given the contention related to the resourcing model for the project, between that of a traditional project team and the matrix model that SSC is seeking to use. Control factors scoring low for the project within this audit criterion included how roles between the Project technical team and the business unit are defined and distinguished.

- Business Unit Support – In the context of the ETI project, these controls are underperforming given that formally defining the roles and responsibilities between the project and operations has been a work in progress; for example, an integrated project work plan that includes operations was only being finalized as of January 31, 2014. Control factors scoring low for the project within this audit criterion included the tactical support the business provides to the Project.

Both of these areas of control relate to the observations under the resource model finding.

Other audit sub-criteria (areas of project controls) that are currently close to underperforming in relation to the expected level of control for the project are:

- Benefits Management – In the context of the project, this relates to further validating and documenting the baseline costs against which project savings will be measured. Control factors scoring low for the project within this audit sub-criterion included how planned benefits are documented. This relates to the baseline costs finding.

- Managing Uncertainty – In the context of the project, this relates to the need for more robust validation of project assumptions. Control factors scoring low for the project within this audit sub-criterion included what is the overall approach to managing uncertainty. This relates to the risk management process finding.

- Scheduling – In the context of the project, this related to the aggressive schedule for project deployment based on delayed and compressed timelines. Control factors scoring low for the project within this audit sub-criterion included what is the quality of the timings for tasks in the Schedule. This relates to the critical path items finding.

- Resource Commitment – In the context of the project, this relates to the need for SSC to further define the use of the matrix model, and ensuring integrated planning includes the Operations Branch. Control factors scoring low for the project within this audit sub-criterion included on what basis are internal staff resources allocated to the Project. This relates to the resource model finding.

- Approach to Risks – In the context of the audit, this relates to the need for the project to ensure that risks are appropriately documented and revisited to ensure appropriate mitigations measures are captured. Control factors scoring low for the project within this audit sub-criterion included how sophisticated is the risk management framework used by the Project. This relates to the risk management process finding.

Note that despite there being a finding related to governance (the ETI execution phase governance structure), both the governance line of enquiry and the governance approach audit sub-criterion within that domain were assessed as operating within the acceptable level of control. This is understandable given that a comprehensive governance structure for ETI has been developed that considers the roles and responsibilities of SSC senior management, central agencies (e.g. TBS), partner organizations and the vendor. Generally, governance is performing well, with the exception of the need for the SADM ETI Committee to allow for further delegation of its decision making. Control factors scoring low for the project within the audit sub-criterion of governance approach included how well authority is delegated or retained by the Steering Committee.

Note that for two other findings (partner organizations and SSC’s relationship with the vendor), the related audit sub-criteria scored within the acceptable level of control, but individual control factors within these sub-criteria scored below the acceptable range of control. For instance, the sub-criterion related to stakeholder management had a control factor on the quality of change management planning that scored outside the acceptable range of control, given the delays in engaging the partners and in developing change management material. For vendor management, the control factors that scored outside the acceptable range of control were the degree to which the contract with the outsourced vendor had strategic alliance components (e.g. shared benefits and risks) and the extent to which metrics are used to measure vendor performance for project delivery.

Annex C – ETI Project Governance Model

Annex D – Key Acronyms

| Acronym | Full Term |
|---|---|
| ADM | Assistant Deputy Minister |
| CIOB | Chief Information Officer Branch |
| COO | Chief Operating Officer |
| DAEC | Departmental Audit and Evaluation Committee |
| DG | Director General |
| ETI | Email Transformation Initiative |
| IT | Information Technology |
| PCR | Projects and Client Relationships |
| PM | Project Manager |
| PPA | Predictive Project Analytics |
| SA&A | Security Assessments and Authorizations |
| SADM | Senior Assistant Deputy Minister |
| SRSC | Service Review Sub-Committee |
| SSC | Shared Services Canada |
| TBS | Treasury Board of Canada Secretariat |
| TSSD | Transformation, Service Strategy and Design |
